This is a cross-post from the website of the Happier Lives Institute.

TL;DR: We estimate that StrongMinds is 12 times (95% CI: 4, 24) more cost-effective than GiveDirectly in terms of subjective well-being. This puts it roughly on a par with the top deworming charities recommended by GiveWell.

[Edit 26/10/2021: Table 4 and accompanying text added]

1. Background and summary

In order to do as much good as possible, we need to compare how much good different things do in a single ‘currency’. At the Happier Lives Institute (HLI), we believe the best approach is to measure the effects of different interventions in terms of ‘units’ of subjective well-being (e.g. self-reports of happiness and life satisfaction).

In this post, we discuss our new research comparing the cost-effectiveness of psychotherapy to cash transfers. Before we get to that comparison, we should highlight the advantage of making it in terms of subjective well-being, which is easiest to see by contrast with some alternative methods.

We could assess the effect each intervention has on wealth, but this would fail to capture the benefits of psychotherapy. It’s implausible to think that treating depression is only good insofar as it helps you to earn more. We could assess their effects using standard measures of health, such as a Disability-Adjusted Life-Year (DALY), but it’s similarly mistaken to think that alleviating extreme poverty is only good insofar as it helps you to become healthier. We could make some arbitrary assumptions about how much changes in income and changes in DALYs each contribute to well-being; this would allow us to ‘trade’ between them. But this would just be a guess and could be badly wrong. If we measure the effects on subjective well-being, that is, how individuals feel and think about their lives (e.g. "Overall, how satisfied are you with your life, nowadays?" 0-10), we can provide an evidence-based comparison in units that more fully capture what we think really matters.

Efforts to work out the global priorities for improving subjective well-being are relatively new. Nevertheless, the recent push to integrate well-being in public policy-making in countries such as Scotland and New Zealand, as well as the reach of publications such as the World Happiness Report (which started in 2012), indicates that this is a viable approach.

Earlier work conducted by HLI’s Director, Michael Plant, suggested that using subjective well-being might reveal different priorities for individuals and organisations seeking to do the most good, with mental health standing out as one area that is crucial and potentially neglected. Plant’s (2018, 2019 ch. 7) prior back-of-the-envelope calculations indicated that StrongMinds, a mental health charity that treats women with depression in Africa, could be as cost-effective as GiveWell’s top charity recommendations.

These initial findings motivated us to do a much more rigorous analysis of the same interventions in terms of subjective well-being, so we undertook meta-analyses in each case. These aimed to address three questions:

- Is assessing cost-effectiveness in terms of subjective well-being feasible: are there enough data that we can make these sorts of comparisons without making major assumptions to fill in the blanks?

- Is this approach worthwhile: does it indicate new or different priorities?

- Does this specific comparison between cash transfers and psychotherapy indicate that donors and decision-makers should change the way they allocate their resources, assuming they want to do the most good?

Our research focused specifically on studies in low- and middle-income countries (LMICs). Our meta-analyses consist of 45 studies (total participants=116,999) for cash transfers and 39 studies (total participants=29,643) for psychotherapy. We assessed their cost-effectiveness using measures of subjective well-being (SWB) and mental health (MHa), which we combined (see section 2.2 for more details).

We estimate that the average psychotherapy intervention in our dataset would be 12 times (95% CI: 4, 27) more cost-effective than the average monthly cash transfer. We used this wider evidence base to estimate the cost-effectiveness of two charities that are highly effective at implementing each type of intervention: GiveDirectly (which provides $1,000 lump-sum cash transfers) and StrongMinds (which provides psychotherapy). When we repeated the analysis for these specific charities, adjusting for how they differed from the average intervention of their type, we found that StrongMinds is 12 times (95% CI: 4, 24) more cost-effective than GiveDirectly.

A few aspects of this research are worth highlighting. First, this meta-analytic approach is much more empirically rigorous than the back-of-the-envelope calculations made in Plant (2018, 2019); we can take these results seriously, rather than as merely indicative. Second, meta-analyses tend to report only the initial effects of an intervention. We also estimated the effects over time and the average costs, which means we can calculate cost-effectiveness (something that is not possible with initial effects alone). Third, we used Monte Carlo simulations to generate our estimates. Rather than using a single number for each part of the cost-effectiveness analysis (e.g. a single number for cost), Monte Carlo simulations involve specifying a range of possible values for each input, then recalculating the results many times, each time using a randomly drawn value for each input. This allows us to better account for uncertainty and to assign confidence intervals to our final cost-effectiveness estimate.
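As a rough illustration of this procedure, here is a minimal Python sketch (our simulations were run in R; this is not that code). It assumes the effect and the cost are independent and normally distributed, uses a hypothetical helper named `simulate_cost_effectiveness`, and reports the mean and a percentile-based 95% interval for SDs of well-being per $1,000 spent. The inputs in the usage line are placeholders, not our estimates.

```python
import random
import statistics


def simulate_cost_effectiveness(effect_mean, effect_se, cost_mean, cost_sd,
                                n_draws=20_000, seed=0):
    """Hypothetical helper: Monte Carlo estimate of SDs of well-being per $1,000.

    Assumes effect and cost are independent normal draws (an illustrative choice).
    Returns the mean of the simulated ratios and a 95% percentile interval.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        effect = rng.gauss(effect_mean, effect_se)
        # Guard against non-positive cost draws, which would make the ratio meaningless.
        cost = max(rng.gauss(cost_mean, cost_sd), 1.0)
        draws.append(1000 * effect / cost)
    draws.sort()
    low = draws[int(0.025 * n_draws)]
    high = draws[int(0.975 * n_draws) - 1]
    return statistics.fmean(draws), (low, high)


# Placeholder inputs (not HLI estimates): effect 1.0 SD (SE 0.4), cost $1,185 (SD $40).
mean_ce, (low, high) = simulate_cost_effectiveness(1.0, 0.4, 1185, 40)
print(f"{mean_ce:.2f} SDs per $1,000 (95% interval {low:.2f} to {high:.2f})")
```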

What are the main conclusions to draw from this new analysis? Let’s return to the three stated aims in turn.

First, such an analysis was feasible. There was more than enough data for a meta-analysis, although we did have to pool ‘classic’ subjective well-being measures (happiness and life satisfaction) with mental health measures (see section 2.2). Aggregating in this way has some precedent (Luhmann et al., 2012; Banerjee et al., 2020; Egger et al., 2020; Haushofer et al., 2020).

Second, performing cost-effectiveness analysis in terms of subjective well-being does seem worthwhile. We found that StrongMinds was 12 times more cost-effective than GiveDirectly; some grantmakers, including Open Philanthropy, consider GiveDirectly to be "the bar" for allocating funds. What's more, this 12x multiple puts StrongMinds roughly on a par with GiveWell’s top-rated life-improving charities, which mostly focus on deworming. Although GiveWell recommends GiveDirectly, it estimates it to be 10-20x less cost-effective than its top deworming charities, in terms of years of doubled consumption. Hence, if we take these multiples at face value, StrongMinds is in the same ballpark as the top-rated life-improving interventions.

The third aim of this research was to determine whether donors should allocate their resources differently. The picture here is more complicated. As noted, taking the multiples at face value, StrongMinds is on a par with GiveWell’s top life-improving interventions; it is not more cost-effective. However, we have not yet looked deeply into deworming ourselves and we would not be surprised if doing so indicated substantially different conclusions.

We suspect that deworming will turn out to be less effective than GiveWell currently estimates (relative to cash transfers and in terms of subjective well-being). In GiveWell’s model of the effect of deworming, its benefits come almost entirely from improved educational attendance which, in turn, causes a small annual increase in income for many years. Hence, although it is a health intervention, the effect comes through reducing poverty. One issue is that a small, annual income increase may have less effect on well-being compared to a single, potentially life-changing lump sum, even if the total increase in lifetime income is the same (the $1,000 lump sum given by GiveDirectly is equivalent to a year of household income). We have raised this concern with GiveWell and you can read the relevant section of our conversation notes here. In our meta-analysis of cash transfers, we find that monthly transfers are about 2.5 times less cost-effective than large lump sums, which suggests that a non-trivial downward adjustment to deworming (relative to cash transfers) may be appropriate. However, it's also possible we will conclude that deworming has a more substantial and positive impact on health than GiveWell currently models, which would raise its cost-effectiveness.

For any donors who need to make an immediate decision, our weakly-held view is that StrongMinds is more cost-effective than any of GiveWell’s top life-improving charities. We plan to conduct further analysis of deworming over the next few months and expect to be able to make a more confident claim then.

Given all this, we believe it is both feasible and worthwhile to investigate more interventions in terms of subjective well-being. As well as deworming, we have several interventions that we’re excited to look at. Based on our shallow analyses so far, we think there’s a good chance these interventions will be more cost-effective than psychotherapy. They run the gamut from ‘micro’ interventions, such as providing clean water, cement flooring, and cataract surgery, to ‘macro’ interventions, such as advocating to integrate well-being metrics in public policy to improve institutional decision-making.

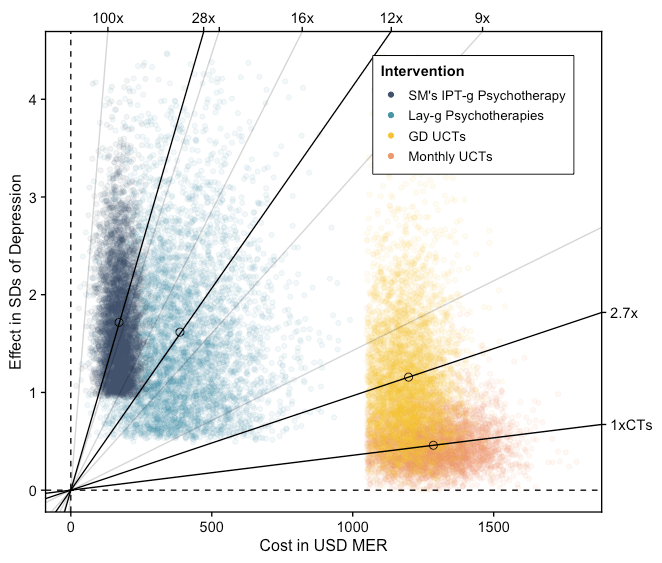

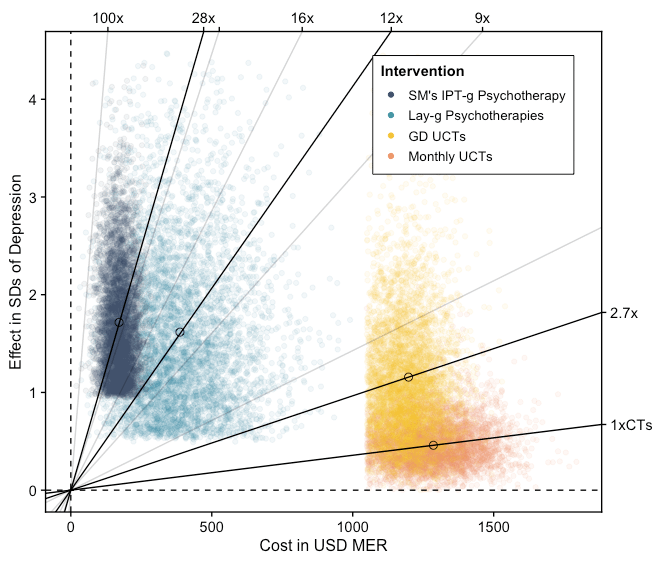

The following sections of this post go on to summarise our three in-depth cost-effectiveness analyses: one on cash transfers (which includes our analysis of GiveDirectly); one on psychotherapy in general; and one on StrongMinds specifically. Our results are summarized below in Figure 1, where the lines with a steeper slope reflect a higher cost-effectiveness in terms of MHa and SWB improvements. Each point is a single run of a Monte Carlo simulation for the intervention, mapping the uncertainty around our estimates of the effects and the costs. Although our estimates for psychotherapy and StrongMinds are more uncertain than cash transfers, we still estimate that they are more cost-effective at improving subjective well-being.

Figure 1: Cost-effectiveness of psychotherapy compared to cash transfers

Note: We assume that GiveDirectly CTs are as effective as other lump-sum CTs, but we think GiveDirectly probably has lower operating costs. This is why we do not display them separately.

2. Cost-effectiveness analyses (CEAs)

2.1 Cash transfers (full analysis)

Cash transfers (CTs) are direct payments made to people living in poverty. They have been extensively studied and implemented in low- and middle-income countries (LMICs) and offer a simple and scalable way to reach people suffering from extreme financial hardship.

Our cash transfers cost-effectiveness analysis (CEA) determines the cost-effectiveness of lump-sum CTs and monthly CTs in LMICs using standard deviation (SD) changes in subjective well-being (SWB) and affective mental health (MHa). We use ‘affective mental health’ to refer to the class of mental health disorders and measures (e.g. PHQ9, CESD20, GHQ12, and GAD7) that relate to lower levels of affect or mood (depression, distress, and anxiety). Measures of affective mental health differ from measures of SWB because they also include questions about how well someone is functioning, such as their appetite, sleep quality, and concentration. These factors influence, but do not constitute, someone’s mood. This analysis provides us with a well-evidenced benchmark that we can use to compare a wide range of interventions.

Much of our analysis extends a meta-analysis of the effect of cash transfers on SWB and MHa in LMICs by McGuire, Kaiser, and Bach-Mortensen (2020). That paper is the result of a collaboration between HLI researchers and academics at the University of Oxford and has been conditionally accepted for publication by Nature Human Behaviour. We think this meta-analysis summarizes the entire extant literature on how cash transfers affect SWB. Our CEA uses the same data (45 studies, 114,274 individuals in LMICs) and shares some analysis on the effectiveness of cash transfers. It expands on the meta-analysis by adding cost information and estimating the total benefit we expect a recipient of a cash transfer to accrue. Please refer to the meta-analysis for more background on cash transfers and a more thorough description of the evidence base.

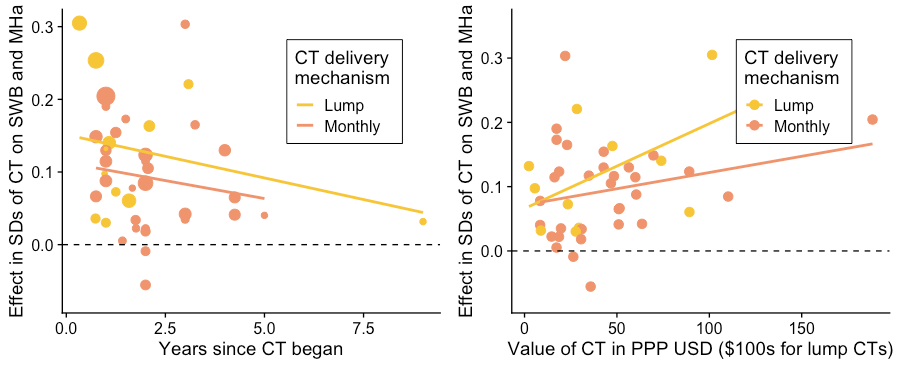

Our models for estimating the effectiveness of lump-sum CTs and monthly CTs are presented in Figure 2 below. The chart on the left shows the relationship between time and the effect on SWB and MHa. As we expect, the effects decay over time. The chart on the right shows the relationship between the value of a CT and the effect on SWB and MHa. Unsurprisingly, the effect is greater for larger CTs (although the data is quite noisy). We haven’t differentiated between the effects on SWB and MHa. Our sample of studies was too small to break down the analyses by both delivery mechanism and measure type (SWB or MHa), but the point estimates suggested that the differences between the measure types were small. For instance, using only SWB measures increases the total effect by 2% for monthly CTs and by 13% for lump-sum CTs. These seemed like sufficient reasons to keep SWB and MHa pooled for our main analysis.

Figure 2: The effect of cash transfers in relation to time and value

Note: These results only display the bivariate relationship between value, time, and the effect.

We present the estimated cost-effectiveness of CTs in Table 1, alongside the per-person effects and per-transfer costs. To get the confidence intervals for the effect and cost, we ran Monte Carlo simulations in R in which each parameter was drawn from a normal distribution based on its standard error (for regression coefficients) or its standard deviation (for costs).

Table 1: Estimated cost-effectiveness of lump-sum CTs and monthly CTs

| | Cost to deliver $1,000 in CTs | Effect in SDs of well-being per $1,000 in CTs | SDs in well-being per $1,000 spent on CTs |
|---|---|---|---|
| Lump-sum CTs (GiveDirectly) | $1,185 ($1,098, $1,261) | 1.09 (0.33, 2.08) | 0.92 (0.28, 1.77) |
| Monthly CTs | $1,277 ($1,109, $1,440) | 0.50 (0.22, 0.92) | 0.40 (0.17, 0.75) |

Note: The 95% confidence interval for each estimate is shown in parentheses after it; it was calculated by inputting the regression and cost results into a Monte Carlo simulation.
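The final column of Table 1 follows from the other two: an effect per $1,000 transferred is scaled by what it costs to deliver that $1,000. The sketch below, with a hypothetical helper name, applies this to the point estimates. The monthly figure comes out at 0.39 rather than 0.40, a small difference likely due to the table's figures coming from the Monte Carlo simulation rather than from dividing point estimates.

```python
def sds_per_1000_spent(effect_per_1000_transferred, cost_to_deliver_1000):
    """Hypothetical helper: convert an effect per $1,000 transferred into SDs per $1,000 spent."""
    return effect_per_1000_transferred * 1000 / cost_to_deliver_1000


lump_sum = sds_per_1000_spent(1.09, 1185)  # GiveDirectly point estimates, Table 1
monthly = sds_per_1000_spent(0.50, 1277)   # monthly CT point estimates, Table 1
print(round(lump_sum, 2), round(monthly, 2), round(lump_sum / monthly, 1))  # 0.92 0.39 2.3
```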

Notably, the estimated total effect of a $1,000 lump-sum CT provided by GiveDirectly is about twice as large as the effect of $1,000 transferred in monthly increments by (mostly) governments. This seems like a suspiciously large difference at first, since we do not think a priori that giving people of similar means the same sum should have dramatically different effects. Some possible explanations we have considered include:

- Lump-sum CTs can be invested more profitably, leading to higher total consumption and benefit. However, monthly CTs could lead to smoother consumption and that could benefit the recipient.

- Governments in LMICs suffer from corruption, while GiveDirectly is a respected charity known for the strength of its organisation.

- Many government CTs require individuals to travel in-person to a collection point while GiveDirectly CTs are transferred automatically using the mobile banking platform M-Pesa.

- GiveDirectly also provides cell phones and a bank account to recipients who lack them. Although it deducts the value of the phone from the transfer, this could plausibly provide an additional benefit to the recipient.

There are three main limitations to our analysis.

Firstly, the recipient is plausibly not the only person impacted by a cash transfer. They can share it with their partner, children, and even friends or neighbours. Such sharing should benefit non-recipients' well-being. However, it’s also possible that any benefit that non-recipients receive could be offset by envy of their neighbour’s good fortune. There appears to be no evidence of significant negative within-village spillover effects, but there is some evidence for positive within-household and across-village spillover effects. We have not included these spillover effects in our main analysis because of the large uncertainty about the relative magnitude of spillovers across interventions and the slim evidence available to estimate the household spillover effects.

Secondly, we have not extensively reviewed the evidence that explores the mechanisms through which CTs improve the SWB of their recipients. This limits our ability to estimate the effects of income gains through other means, such as deworming. For instance, we have so far found little evidence regarding how much of the benefit of a CT is contingent on recipients' relative versus absolute improvement in material circumstances. Is the benefit due to recipients making beneficial comparisons to those who didn’t receive a CT or is it because of the absolute change in the recipients’ living standards as a result of higher consumption? If the channel for CTs to improve SWB and MHa primarily runs through social comparison, then that would suggest that the effects would be smaller for an intervention that increases everyone's income in an area.

Thirdly, we have sparse data on the long-term effects of CTs on SWB. Only one study follows up with its recipients after five years. Long-term follow-ups of CTs are important for understanding how persistent their benefits are, and thus the total benefit they provide to their recipients.

2.2 Psychotherapy (full analysis)

Having determined the cost-effectiveness of cash transfers in terms of subjective well-being, we can now conduct a direct comparison with the cost-effectiveness of psychotherapy.

Psychotherapies vary considerably in the strategies they employ to improve mental health, but some common types of psychotherapy are cognitive behavioural therapy (CBT) and interpersonal therapy (IPT). Our analysis does not focus on a particular form of psychotherapy. Previous meta-analyses find mixed evidence supporting the superiority of any one form of psychotherapy for treating depression (Cuijpers et al., 2019).

Instead, we focus our analysis on the average intervention-level cost-effectiveness of any form of face-to-face psychotherapy delivered to groups or by non-specialists deployed in LMICs. As before, we seek to measure the effect as the benefit they provide to subjective well-being (SWB) and affective mental health (MHa).

We extracted data from 39 studies of psychotherapy that appeared to be delivered by non-specialists and/or to groups. We drew these from five meta-analytic sources, plus any additional studies we found in our search for the costs of psychotherapy. These studies are not exhaustive: we stopped collecting new studies due to time constraints and our estimation of diminishing returns. We aimed to include all RCTs of psychotherapy with outcome measures of SWB or MHa but only found studies with measures of MHa.

In Table 2, we display our estimated post-treatment effects and how long they last for the average psychotherapy intervention in our sample. Under the exponential model, the post-treatment effect is estimated at 0.457 SDs of MHa (95% CI: 0.342, 0.611). After running further regressions, we found evidence that group psychotherapy is more effective than psychotherapy delivered to individuals, in line with other meta-analyses (Barkowski et al., 2020; Cuijpers et al., 2019). One explanation for this is that the peer relationships formed in a group provide an additional source of value beyond the patient-therapist relationship.

Table 2: Post-treatment effect and decay through time

| | Model 1 (linear) | Model 2 (exponential) |
|---|---|---|
| Effect at post-treatment (SDs of MHa improved) | 0.574 | 0.457 |
| 95% CI | (0.434, 0.714) | (0.342, 0.611) |
| Annual decay of benefits (SDs lost per year in Model 1, % kept per year in Model 2) | -0.104 | 71.5% |
| 95% CI | (-0.197, -0.010) | (53%, 96.5%) |
| Total effect at 5.5 yrs (end of linear model effects) | 1.59 | 1.56 |
| Total effect at 10 yrs | 1.59 | 1.78 |
| Total effect at 30 yrs | 1.59 | 1.85 |
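The totals in Table 2 are areas under the effect-over-time curve. The sketch below shows the two functional forms, assuming the linear model falls by a fixed number of SDs per year until it reaches zero, and the exponential model keeps a fixed share of the effect each year, integrated continuously; the helper names are hypothetical. With point estimates, the linear model gives about 1.58 SDs over about 5.5 years, close to the table. The exponential function fed only point estimates gives a long-run total of about 1.36 SDs, below the 1.85 in the table, so treat it as an illustration of the functional form under this assumed parametrization rather than a reproduction of the table.

```python
import math


def linear_total(initial, annual_decline):
    """Area under an effect that starts at `initial` SDs and falls by
    `annual_decline` SDs per year until it reaches zero."""
    duration = initial / annual_decline  # years until the effect hits zero
    return 0.5 * initial * duration, duration


def exponential_total(initial, share_kept, years):
    """Area under initial * share_kept**t for t in [0, years] (continuous decay).
    This parametrization is an assumption for illustration."""
    k = -math.log(share_kept)  # continuous decay rate implied by the yearly share kept
    return initial * (1 - share_kept ** years) / k


total, duration = linear_total(0.574, 0.104)          # Model 1 point estimates
print(round(total, 2), round(duration, 1))            # 1.58 5.5
print(round(exponential_total(0.457, 0.715, 30), 2))  # 1.36
```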

Before we compare the total effect of psychotherapy to cash transfers, we discount interventions with relatively lower-quality evidence. We estimate that the evidence base for psychotherapy overestimates its efficacy relative to cash transfers by 11% (95% CI: 0%, 40%) because psychotherapy has lower sample sizes on average and fewer unpublished studies, both of which are related to larger effect sizes in meta-analyses.

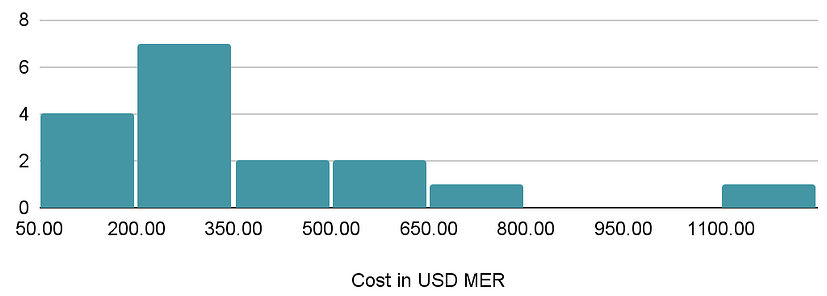

We reviewed 28 sources that estimated the cost of psychotherapy and included 11 in our summary of the costs of delivering psychotherapy (see Figure 3 below). All of these come from academic studies except the cost figures for StrongMinds. The cost of treating an additional person with lay-delivered psychotherapy ranges from $50 to $659.

Figure 3: Distribution of the cost of psychotherapy interventions.

Having established estimates for the cost and the effectiveness of psychotherapy, we can now show in Table 3 that psychotherapy is estimated to be 12 times (95% CI: 4, 27) more cost-effective than cash transfers.

Table 3: Comparison of unconditional cash transfers to psychotherapy in LMICs

| | Cash Transfers | Psychotherapy |
|---|---|---|
| Total effect on SWB & MHa | 0.50 (0.22, 0.92) | 1.60 (0.68, 3.60) |
| Cost per intervention | $1,277 ($1,109, $1,440) | $360 ($30, $631) |
| Cost-effectiveness per $1,000 USD spent | 0.40 SDs (0.17, 0.75) | 4.30 SDs (1.1, 24) |

Note: 95% CIs are presented in parentheses after each estimate.

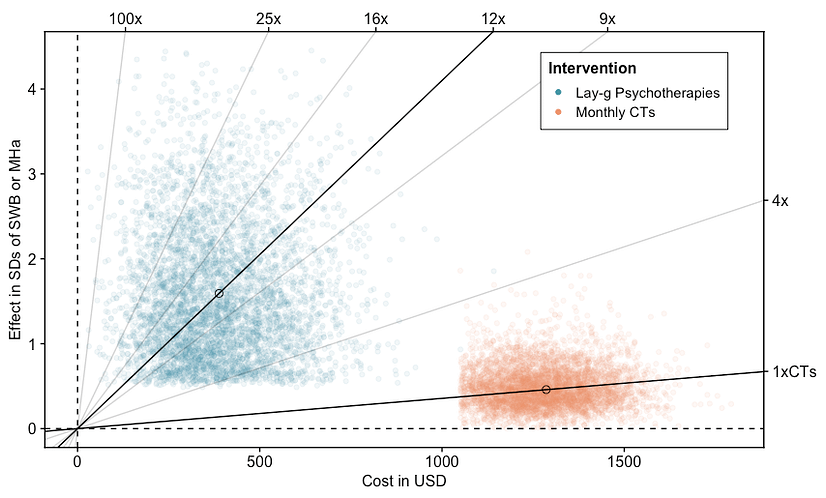

In Figure 4, we take the results in Table 3 and show the results of simulations that compare the cost-effectiveness of each intervention. The total effect is on the y-axis and the total cost is on the x-axis for both interventions. Each point represents a run of a simulation. The higher the slope of the line, the more cost-effective the intervention. What should be clear is that the distributions of cost-effectiveness do not overlap. However, we do not include spillover effects on the household or the community in our comparison. Spillover effects are much more uncertain for reasons we explained earlier.

Figure 4: Cost-effectiveness of psychotherapy compared to cash transfers

The following considerations and limitations are discussed in detail in our full psychotherapy CEA report:

- There may be issues with assuming that a 1 SD improvement in MHa scores is equivalently informative about wellbeing as a 1 SD increase in SWB measures (such as happiness or life satisfaction). For example, changes in MHa may poorly predict changes in SWB. This does not appear to be a concern in practice because the measures seem both highly correlated and to give similar answers in meta-analyses of psychological interventions. That being said, we have not looked into the matter deeply. If using different measures gives different answers, then we would need to establish a conversion rate between MHa and SWB measures. Converting between measures of wellbeing requires both evidence of how these measures relate and judgements on which measures better proxy what really matters. We acknowledge that this is a source of uncertainty that further research could work to reduce.

- The mental health scores for recipients of different programmes might have different-sized standard deviations. For example, the SD could be 15 for cash transfers and 20 for psychotherapy, on a given mental health scale. This would bias our comparisons across interventions.

- Cost data are sparse for psychotherapy. Studies that report costs often make it unclear what their cost encompasses, which makes the comparison of costs across studies less certain.

- Data on the long-term effects of psychotherapy (beyond 2 years) are also very sparse. Given that we need to know the total effect over time, this means a key parameter, duration, is estimated with relatively little information.

- We do not incorporate spillover effects of psychotherapy into our main analysis. This may matter if the relative sizes of household spillovers differ substantially between interventions, but at the moment we do not have clear evidence to indicate that they do.
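The point about differently sized standard deviations can be made concrete with the SDs mentioned above (15 and 20). Assuming a hypothetical raw improvement of 6 points on the same scale, dividing by each programme's SD gives different standardized effects, so the same change looks a third larger where the SD is smaller.

```python
def standardized_effect(raw_change, outcome_sd):
    """Express a raw change on a mental health scale in SD units."""
    return raw_change / outcome_sd


raw_change = 6  # hypothetical improvement, in scale points
print(standardized_effect(raw_change, 15))  # 0.4 (SD of 15)
print(standardized_effect(raw_change, 20))  # 0.3 (SD of 20)
```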

2.3 StrongMinds (full analysis)

Having established that psychotherapy appears to be more cost-effective than cash transfers in terms of subjective well-being, we now need to identify the best donation opportunities for those who wish to direct more financial resources towards this intervention. We will now explain why we think StrongMinds is likely to be one of the best opportunities of this type.

In 2019, HLI began the Mental Health Programme Evaluation Project (MHPEP) to identify the most cost-effective charities delivering psychotherapy interventions in LMICs. The first round of the evaluation began with a review of 76 interventions from the Mental Health Innovation Network, from which we identified 13 priority programmes for further evaluation. Our screening criteria included: whether the intervention is targeted at depression, anxiety or stress disorders in LMICs; whether a controlled trial has been conducted on the programme; and an initial evaluation of cost-effectiveness. You can read more about the process we followed here.

In 2020, we began reaching out to organisations that deliver one of our priority programmes. Unfortunately, many of the people we approached did not respond or told us they didn’t have enough time to participate. The only organisation that was on our shortlist and did provide detailed cost information was StrongMinds, a non-profit founded in 2013 that provides group interpersonal psychotherapy (g-IPT) to impoverished women in Uganda and Zambia. As a result, StrongMinds was one of the organisations we were most excited about prior to doing any detailed analysis. Friendship Bench, another promising mental health NGO, has since offered to provide us with their cost data, but we have not been able to review it yet.

Our cost-effectiveness analysis of StrongMinds builds on previous work by Founders Pledge (Halstead et al., 2019) in three ways. First, we combined broader evidence from the psychotherapy literature with direct evidence of StrongMinds’ effectiveness in order to increase the robustness of our estimates. Second, we updated the direct evidence of StrongMinds’ costs and effectiveness to reflect the most recent information. Finally, we designed our analysis to include the cost-effectiveness of all StrongMinds’ programmes. This allows us to compare the impact of a donation to StrongMinds to other interventions, such as unconditional cash transfers delivered by GiveDirectly.

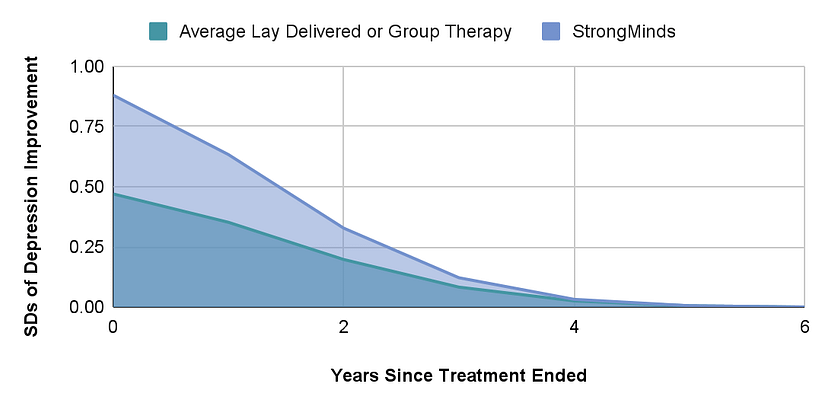

We estimate the total effect of StrongMinds on the individual recipient to be an expected 1.92 SD (95% CI: 1.1, 2.8) improvement in MHa scores. Figure 5 visualises the trajectory of the effects of StrongMinds’ core programme compared to the average lay- or group-delivered psychotherapy through time.

Figure 5: Trajectory of StrongMinds compared to lay psychotherapy in LMICs.

StrongMinds records its average cost of providing treatment to a person and has shared the most recent figures for each programme with us. We assume that StrongMinds can continue to treat people at levels of cost comparable to previous years. It is also worth noting that StrongMinds defines treatment as attending more than six sessions (out of 12) for face-to-face modes and more than four for teletherapy. If we had used the cost per person reached (attended at least one session), then the average cost would decrease substantially.
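To see why the definition of "treated" matters for cost, the sketch below encodes the completion rule just described (more than six of 12 sessions face-to-face, more than four for teletherapy) and compares cost per person treated with cost per person reached. The cohort, the $1,000 budget, and the helper names are hypothetical illustrations, not StrongMinds' figures or methods.

```python
def is_treated(sessions_attended: int, mode: str) -> bool:
    """Completion rule as described in the text: more than six sessions
    face-to-face, more than four for teletherapy."""
    threshold = 6 if mode == "face_to_face" else 4
    return sessions_attended > threshold


def cost_per_person(total_cost: float, cohort, only_treated: bool = True) -> float:
    """Average cost per person treated (default) or per person reached
    (attended at least one session)."""
    if only_treated:
        n = sum(is_treated(sessions, mode) for sessions, mode in cohort)
    else:
        n = sum(1 for sessions, _ in cohort if sessions >= 1)
    return total_cost / n


# Hypothetical cohort of (sessions attended, delivery mode) pairs.
cohort = [(12, "face_to_face"), (8, "face_to_face"), (3, "face_to_face"),
          (5, "teletherapy"), (2, "teletherapy")]
print(round(cost_per_person(1000, cohort), 2))                      # 333.33
print(round(cost_per_person(1000, cohort, only_treated=False), 2))  # 200.0
```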

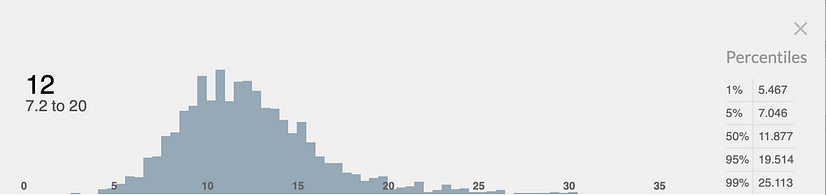

Having incorporated all our uncertainties into a Monte Carlo simulation, we estimate that a $1,000 donation to StrongMinds will result in a 12 SD (95% CI: 7.2, 20) improvement in MHa. This range of uncertainty is similar to that of the core programme (95% CI: 8.2 to 24 SDs). This may sound very large, but we expect that $1,000 will cover the treatment of around seven people. We show the distribution of this estimate in Figure 6 below.

Figure 6: Distribution of overall cost-effectiveness of a $1,000 donation to StrongMinds

The following considerations and limitations are discussed in detail in our full StrongMinds CEA report:

- Will StrongMinds’ transition to implementing g-IPT through partners positively impact its cost-effectiveness?

- Do current calculations underestimate StrongMinds’ cost-effectiveness by excluding the effect and cost of those who did not complete treatment?

2.4 Comparison

Figure 7 illustrates a simulation of the comparison between psychotherapy, StrongMinds, monthly cash transfers, and GiveDirectly. Each point is an estimate given by a single run of a Monte Carlo simulation. Lines with a steeper slope reflect a higher cost-effectiveness in terms of SWB and MHa. Bold lines reflect the cost-effectiveness gradient of interventions, while grey lines are for reference. In this comparison, a $1,000 donation to StrongMinds is around 12 times (95% CI: 4, 24) more cost-effective than a comparable donation to GiveDirectly.

Figure 7: Cost-effectiveness of StrongMinds compared to GiveDirectly

A word of caution is warranted when interpreting these results. Our comparison to cash transfers is only based on affective mental health. We were unable to find any studies that used direct measures of SWB to determine the effects of psychotherapy, so it remains to be seen whether the impacts are substantially different when using those measures instead.

Additionally, this comparison only includes the effects on the individual and it is possible that the spillover effects differ considerably between interventions. That being said, even if we take the upper range of GiveDirectly’s total effect on the household of the recipient (8 SDs), psychotherapy is still around twice as cost-effective.

In Table 4 we present the exact figures shown in the comparison in Figure 7. The key takeaway is that this result is driven mostly by the difference in the cost of each intervention, not by the difference in effectiveness.

Table 4: StrongMinds compared to GiveDirectly

| | GiveDirectly lump-sum CTs | StrongMinds psychotherapy | StrongMinds relative to GiveDirectly |
|---|---|---|---|
| Initial effect | 0.24 (0.11, 0.43) | 0.79 (0.52, 1.2) | |
| Effect duration in years | 8.7 (4, 19) | 5.0 (3, 10) | |
| Total effect on SWB & MHa | 1.1 (0.33, 2.1) | 1.7 (1.1, 2.8) | Explains 13% of the difference in cost-effectiveness |
| Cost per intervention | $1,185 ($1,098, $1,261) | $128 ($70, $300) | Explains 87% of the difference in cost-effectiveness |
| Cost-effectiveness per $1,000 USD | 0.92 (0.28, 1.8) | 11.8 (7, 21) | StrongMinds is 12x (4, 24) more cost-effective |

Note: 95% confidence intervals are shown in parentheses after the estimates.
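The claim that cost drives the result rests on a simple identity: the ratio of cost-effectiveness equals the ratio of total effects multiplied by the inverse ratio of costs. The sketch below applies it to Table 4's point estimates. Multiplying point estimates gives roughly 14x rather than the 12x from the Monte Carlo comparison, so it illustrates the decomposition rather than reproducing the table's multiple or its 13%/87% split; it only shows that the cost ratio (about 9.3) dwarfs the effect ratio (about 1.5).

```python
gd_effect, gd_cost = 1.1, 1185  # GiveDirectly point estimates, Table 4
sm_effect, sm_cost = 1.7, 128   # StrongMinds point estimates, Table 4

effect_ratio = sm_effect / gd_effect  # how much larger StrongMinds' total effect is
cost_ratio = gd_cost / sm_cost        # how many times cheaper StrongMinds is per person
ce_ratio = (sm_effect / sm_cost) / (gd_effect / gd_cost)  # ratio of cost-effectiveness

print(round(effect_ratio, 2), round(cost_ratio, 2), round(ce_ratio, 1))  # 1.55 9.26 14.3
```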

3. Conclusions and next steps

We think these reports show that working out how to do the most good by using subjective well-being is feasible and worthwhile. In particular, we believe that our analysis convincingly demonstrates that high-quality mental health interventions can be as good as, or better than, highly-regarded and well-evidenced economic interventions.

Of course, there are many more interventions that need to be analysed in terms of subjective well-being before a fuller picture begins to emerge, but we do want to stress to prospective donors that we consider StrongMinds to be an excellent donation opportunity if your aim is to improve global health and well-being. As of October 2021, they have a funding gap of $6.6 million for 2022. They are also planning for rapid growth (treating 50,000 in 2022, 100,000 in 2023, and 150,000 in 2024) equalling 300,000 women treated over the next three years. To fuel this growth trajectory, they will need to raise $30 million in total over the next three years.

We suspect there are other highly effective donation opportunities waiting to be discovered. We have several avenues to explore and we can do this because our method allows us to compare different outcomes with a single metric. Our research pipeline includes a range of other promising interventions to analyse (conditional on us acquiring the necessary funding). These include Friendship Bench, mental health apps, cataract surgery, cement flooring, drug liberalisation (including better access to opioids and psychedelics) as well as advocacy to integrate well-being metrics in public policy (as a replacement/complement to GDP) and to make employee well-being a central indicator of environmental, social, and corporate governance (ESG) performance.

In addition, there are a number of theoretical questions about the nature and measurement of well-being that require further investigation. For instance, in order to compare StrongMinds to GiveWell’s death-averting recommendations we must first establish the location of the ‘neutral point’ on happiness scales — a vital, but highly contested factor in assessing the cost-effectiveness of life-saving interventions in terms of subjective well-being. For more, see our analysis using subjective well-being to compare life-saving to life-improving interventions. Further research is also required to establish the best methods for converting between different subjective well-being measures. You can learn more about our future plans in our research agenda.

If you found this post valuable, and you would like to support our future research, please consider making a donation to the Happier Lives Institute. We are actively seeking new donors to fund our future research. If you would like to discuss our research further, or are thinking about making a donation, please contact michael@happierlivesinstitute.org.

Credits

This research was produced by the Happier Lives Institute.


This is amazing work! I have a bunch of thoughts, which I'll number so it's easier for you or others to respond to. Sorry that this comment is a bit long; you can respond to the numbers one-by-one instead of all at once if you'd like:

It will likely take some time before GiveWell would be able to make StrongMinds a top charity, but it would be exciting if StrongMinds (or any mental health charity) could make it to GiveWell's list of recommended charities as early as the end of 2022. It would be nice to hear from GiveWell about the following too if they:

Our goal is to find 1-2 charity ideas that are highly cost-effective to implement in the Philippines (and competitive with StrongMinds), and that Charity Entrepreneurship will be willing to incubate in 2022. These reports of yours will likely be very useful for us, which is why I took the time to read this report and browse through some of the others linked here. And I can see how we can build off and learn from this research in various ways. We'll probably email you within the next couple of weeks to schedule a call with you and/or Joel, with more specific questions about HLI's research and to get advice about our project!

This might make sense as a vision if GiveWell doesn't plan on recommending some charities that do well on improving SWB (i.e. StrongMinds). Hopefully GiveWell does though.

Brian, I am glad to see your interest in our work!

1.) We have discussed our work with GiveWell. But we will let them respond :).

2.) We're also excited to wade deeper into deworming. The analysis has opened up a lot of interesting questions.

3.) I’m excited about your search for new charities! Very cool. I would be interested to discuss this further and learn more about this project.

4.) You’re right that in both the case of CTs and psychotherapy we estimate that the effects eventually become zero. We show the trajectory of StrongMinds effects over time in Figure 5. I think you’re asking if we could interpret this as an eventual tendency towards depression relapse. If so, I think you’re correct since most individuals in the studies we summarize are depressed, and relapse seems very common in longitudinal studies. However, it’s worth noting that this is an average. Some people may never relapse after treatment and some may simply receive no effect.

5.) I'll message you privately about this for the time being.

6.) In general we hope to get more people to make decisions using SWB.

7.) I am going to pass the buck on making a comment on this :P. This decision will depend heavily on your view of the badness of death for the person dying and whether the world is over- or underpopulated. We discuss this a bit more in our moral weights piece. In my (admittedly limited) understanding, the goodness of improving the wellbeing of presently existing people is less sensitive to the philosophical view you take.

Thanks for the flag, Joel.

Brian, our team is working on our own reports on how we view interpersonal group therapy interventions and subjective well-being measures more generally. We expect to publish our reports within the next 3-6 months.

We have spoken to HLI about their work, and HLI has given us feedback on our reports. It’s been really helpful to discuss this topic with Michael, Joel, and the team at HLI. Their work has provided some updates to how we view this topic, even if we do not ultimately end up reaching the same conclusions.

We’re still looking into this area and some of the important questions HLI has raised. While we plan to provide a more detailed view once our reports are published, a few areas where we differ from HLI are below:

We still have a lot of uncertainty about how to compare different interventions like cash transfers and therapy, and making these comparisons is crucial to our decisions on what funding opportunities to recommend to our donors. As a result, we hope to continue to discuss this topic with individuals whose views on moral weights differ from ours so that we can continue to refine our approach.

We look forward to engaging once we publish a fully vettable report. Until then, I hope this answers the immediate questions you have about where the views of GiveWell and HLI differ.

Before I respond to the details, I’d like to thank GiveWell for engaging with these questions. I’m delighted our research has led to them producing their own reports into group psychotherapy and using subjective wellbeing to determine one's moral weights.

GiveWell kindly shared a draft of their reply with us in advance and we made several comments clarifying our position. However, they decided to publish their original draft unchanged (without offering a further explanation) so we're restating our comments here so that readers can build a better understanding of where our positions differ. I’ll split these up so it’s easier to follow, first quoting the response from GiveWell, then providing our reply.

To clarify, the intervention is not to provide therapy to anyone, it's just to provide it to those who are depressed. I expect that even some depressed people would choose cash over therapy. But it's reasonable to assume people don't always know what's best for them and under/overconsume on certain goods due to lack of information, etc. That's why we need studies to see what truly improves people's subjective well-being.

If one were serious about always giving people what they choose, one would just give people cash and let them decide. Given that GiveWell claims that bednets and deworming are better than cash, it seems they already accept that cash is not necessarily best. Hence, it’s unclear how they could raise this as a problem for therapy without being inconsistent.

What I think might have been overlooked here is that therapy is only being given to people diagnosed with mental illnesses, but the cash transfers go to poor people in general (only some of whom will be depressed). Hence, it's perhaps not so surprising that directly treating the depression of depressed people is more impactful than giving out money (even if those people are poor). If you were in pain but also poor, no one would assume that giving you money would do more for your happiness than morphine would.

We account for this trial in our meta-analysis - if we hadn’t incorporated it, therapy would look even a bit more cost-effective. Of course, the point of meta-analyses is to look at the whole evidence base, rather than just selecting one or two pieces of evidence; one could discount any meta-analysis this way by pointing to the trial with the lowest effect.

We don’t think one study should overshadow the results of a meta-analysis, which aggregates a much wider set of data ("beware the man of only one study" etc). If there was one study finding no impact of bednets, I doubt GiveWell would conclude it would be reasonable to discount all the previous data on bednets.

What is the conversion rate here between DALYs and income increases, and on what is it based? I'm not sure what method could be used here except inputting one's intuitions. In that case, it would be good to make that clear, as people may think the conversion rate is an authoritative fact rather than (just) an opinion. It would be interesting to state how much readers' opinions would need to differ from GiveWell’s to reach alternative conclusions!

To bang a familiar drum, the reason to use subjective wellbeing measures is that we can observe how much health and income changes improve wellbeing, rather than having to guess.

It's not easy to respond to this - it's not stated what the limitations and other factors are.

More generally, there's no reason to think in the abstract that, if you're pluralist rather than monist about value, this changes the relative cost-effectiveness ranking of different actions. You'd need to provide a specific argument about what the different values are, how each intervention relatively does on this, and how the units of value are commensurate.

For example, imagine a scenario where intervention X provides 15 units of happiness/$ but does nothing for autonomy and intervention Y provides 10 units of happiness/$ and 10 units of autonomy/$. If we take one unit of happiness as being as valuable as one unit of autonomy, then Y is better than X. However, someone who only valued happiness would think X is better.

It would be helpful if GiveWell could share what their current best guess is. Even if spillovers are 30% for therapy and 100% for cash, then, assuming the original 12x multiple and 3 other household members, the multiple would still be 5.7:

Cash = 1 + (1*3) = 4

Psychotherapy = 12 + ((0.3*12)*3) = 12 + 10.8 = 22.8

Hence, therapy still looks quite a bit better even if the spillover effects are small.
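The arithmetic above can be written as a small helper. This is a sketch that assumes, as the comment does, that each of `n_others` household members receives a fixed fraction of the recipient's benefit, with the cash recipient's benefit normalised to 1; `spillover_multiple` is a hypothetical name, not part of HLI's or GiveWell's models.

```python
def spillover_multiple(base_multiple, s_therapy, s_cash, n_others=3):
    """Therapy-vs-cash multiple after counting household spillovers.

    Hypothetical helper: each of n_others household members is assumed to
    get a fraction s of the recipient's benefit (s_therapy for therapy,
    s_cash for cash); cash's benefit to the recipient is normalised to 1.
    """
    cash_total = 1.0 + s_cash * n_others
    therapy_total = base_multiple * (1.0 + s_therapy * n_others)
    return therapy_total / cash_total


# The scenario in the comment: 30% spillover for therapy, 100% for cash.
print(round(spillover_multiple(12, s_therapy=0.3, s_cash=1.0), 2))  # 5.7
# The extreme case of no therapy spillover at all.
print(spillover_multiple(12, s_therapy=0.0, s_cash=1.0))  # 3.0
```

Under these simplified assumptions, even zero therapy spillover leaves therapy about 3x more cost-effective than cash.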

This is definitely not what we think, particularly the assumption that it will be proportional across 'any' intervention! I'm not sure why someone would believe that.

Our position, as outlined in this Twitter thread, is quite a bit more nuanced. There wasn't much evidence we could find on household spillovers (five studies for cash, one for mental health), and in each case it indicated very large spillover effects, i.e. in the range where other household members got 70-100% of the benefit the recipient did. We didn't include that in the final estimate because there was so little evidence and, if we'd taken it at face value, it would only have modestly changed the results (making therapy 8-10x better). Even in the extreme, and implausible, case where therapy has no household spillovers, it wouldn't have overturned the result that psychotherapy is more cost-effective than cash transfers. We discussed this in the individual cost-effectiveness analysis reports and flagged it as something to come back to for further research.

We agree that the effects of household spillovers from cash are large. Where our priors may diverge is that HLI (and others) think that the spillovers from therapy are also large, whereas GiveWell is very sceptical about this. We are now conducting a thorough search for more evidence on household spillovers, so we are not just swapping priors.

We have published an updated cost-effectiveness comparison of psychotherapy and cash transfers to include an estimate of the effects on other household members. You can read a summary here.

For cash transfers, we estimate that each household member experiences 86% of the benefits experienced by the recipient. For psychotherapy, we estimate the spillover ratio to be 53%.

After including the household spillover effects, we estimate that StrongMinds is 9 times more cost-effective than GiveDirectly (a slight reduction from 12 times in our previous analysis).

There is much to be admired in this report, and I don't find it intuitively implausible that mental health interventions are several times more cost-effective than cash transfers in terms of wellbeing (which I also agree is probably what matters most). That said, I have several concerns/questions about certain aspects of the methodology, most of which have already been raised by others. Here are just a few of them, in roughly ascending order of importance:

My out-of-date notes:

Topic 2.2: (Re-)prioritising causes and interventions

[…]

GiveWell

[…]

Spillover effects

Secondly, there are also potential issues with ‘spillover effects’ of increased consumption, i.e. the impact on people other than the beneficiaries. This is particularly relevant to GiveDirectly, which provides unconditional cash transfers; but consumption is also, according to GiveWell’s model, the key outcome of deworming (Deworm the World, Sightsavers, the END Fund) and vitamin A supplementation (Helen Keller International). Evidence from multiple contexts suggests that, to some extent, the psychological benefits of wealth are relative: increasing one person’s income improves their SWB, but this is at least partly offset by decreases in the SWB of others in the community, particularly on measures of life satisfaction (e.g. Clark, 2017). If increasing overall wellbeing is the ultimate aim, it seems important to factor these ‘side-effects’ into the cost-effectiveness analysis.

As usual, GiveWell provides a sensible discussion of the relevant evidence. However, it is somewhat out of date and does not fully report the findings most relevant to SWB, so I’ve provided a summary of wellbeing outcomes from the four most relevant papers in Appendix 2.1. In brief:

As GiveWell notes, it is hard to aggregate the evidence on spillovers (psychological and otherwise) because of:

Like GiveWell, I suspect the adverse happiness spillovers from GiveDirectly’s current program are fairly small. In order of importance, these are the three main reasons:

In addition, any psychological harm seems to be primarily to life satisfaction rather than hedonic states. As noted in Haushofer, Reisinger, & Shapiro (2019): “This result is intuitive: the wealth of one’s neighbors may plausibly affect one’s overall assessment of life, but have little effect on how many positive emotional experiences one encounters in everyday life. This result complements existing distinctions between these different facets of well-being, e.g. the finding that hedonic well-being has a “satiation point” in income, whereas evaluative well-being may not (Kahneman and Deaton, 2010).” This is reassuring for those of us who tend to think feelings ultimately matter more than cognitive evaluations.

Nevertheless, I’m not extremely confident in the net wellbeing impact of GiveDirectly.

A few more notes on interpreting the wellbeing effects of GiveDirectly:

In addition, I would note that the other income-boosting charities reviewed by GiveWell could potentially cause negative psychological spillovers. According to GiveWell’s model, the primary benefit of deworming and vitamin A supplementation is increased earnings later in life, yet no adjustment is made for any adverse effects this could have on other members of the community. As far as I can tell, the issue has not been discussed at all. Perhaps this is because these more ‘natural’ boosts to consumption are considered less likely to impinge on neighbours’ wellbeing than windfalls such as large cash transfers. But I’d like to see this justified using the available evidence.

I make some brief suggestions for improving assessment of psychological spillover effects in the “potential solutions” subsection below.

Appendix 2.1

Four studies investigated psychological impacts of GiveDirectly transfers. Two of these found wellbeing gains for cash recipients (“treatment effects”) and only null or positive psychological spillovers:

However, two studies are more concerning:

Note: GiveWell’s review of an earlier version of the paper reports a “statistically significant negative effect on an index of psychological well-being that is larger than the short-term positive effect that the study finds for receiving a transfer, but the negative effect becomes smaller and non-statistically significant when including data from the full 15 months of follow-up… The authors interpret these results as implying that cash transfers have a negative effect on well-being that fades over time.” I’m not sure why the authors removed those analyses from the final version.

Hi Derek, it’s good to hear from you, and I appreciate your detailed comments. You suggest several features we should consider in our next intervention comparison and in the next version of these analyses. I think testing the robustness of our results to more fundamental assumptions is where we are likeliest to see our uncertainty expand. But I moderately disagree that our model is straightforward to adapt in this way. I’ll address your points in turn.

Thanks for the reply. I don't have much more time to think about this at the moment, but some quick thoughts:

This paper, the Drummond book above, and this book are good starting points if you want to learn how to do cost-effectiveness analysis (including sensitivity analysis).

A couple nitpicks:

Hi Derek, thank you for your comment and for clarifying a few things.

I strongly agree with Derek's point about measuring the nonmonetary costs to the recipients and their families. If your results are driven mainly by the difference in costs, then omitting potentially relevant costs can invalidate the entire analysis. You absolutely must account for the time that recipients spent in the program, and traveling to and from the program, and any other money or time costs that they or their families incurred as a result of program participation. At minimum, this time should be valued at the local wage rate. Until this is addressed, I will assume that your analysis is junk, and say so to anyone who asks me about it.

I haven't read the debate closely, but people who like this post would probably be interested in the authors' Twitter conversation about this research with Alexander Berger (head of global health and wellbeing at Open Philanthropy).

On Twitter, Michael Plant wrote:

I think the right thing to do here (besides further research) would be to give less weight to the psychotherapy spillover estimate and adjust the effect downwards (or at least more downwards than CTs' spillover, which has evidence from multiple studies, presumably some better designed and with larger sample sizes), based on a skeptical prior.

PT=psychotherapy, CT=cash transfers

PT= 12 x CT without spillovers

CT' = 4 x CT with spillovers

PT' = (1+3s) x PT with spillovers, where s=spillover effect for psychotherapy

PT'= ((1+3s)/4) * 12 x CT' = 3(1+3s) x CT'.

The worst case, s=0, has PT'=3 x CT'. With s=0.25=25%, PT'=5.25 x CT', and with s=0.5=50%, PT'=7.5 x CT'.
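The tweet's derivation fixes the cash-transfer spillover at 100%. A minimal generalisation, assuming the same three other household members and writing s_c for the cash-transfer spillover and s_p for the psychotherapy spillover (the tweet's s), follows the same steps:

```latex
\begin{aligned}
CT' &= (1 + 3s_c)\,CT, \qquad PT' = (1 + 3s_p)\,PT, \qquad PT = 12\,CT \\
\frac{PT'}{CT'} &= 12 \cdot \frac{1 + 3s_p}{1 + 3s_c}
\end{aligned}
```

Setting s_c = 1 recovers PT' = 3(1+3s_p) x CT'. Plugging in the updated ratios HLI reports in the reply below (s_c = 0.86, s_p = 0.53) gives 12 x 2.59 / 3.58 ≈ 8.7, in line with the reported 9x, although HLI's actual model may not take this simplified form.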

As a result of Alexander's feedback, we’ve updated our cost-effectiveness comparison of psychotherapy and cash transfers to include an estimate of the effects on other household members. Our previous analysis only considered the effects on recipients.

You can read a summary of our new analysis here.

For cash transfers, we estimate that each household member experiences 86% of the benefits experienced by the recipient. For psychotherapy, we estimate the spillover ratio to be 53%.

After including the household spillover effects, we estimate that StrongMinds is 9 times more cost-effective than GiveDirectly (a slight reduction from 12 times in our previous analysis).

This new analysis of household effects is based on a small number of studies, eight for cash transfers and three for psychotherapy. The lack of data on household effects is a serious gap in the literature that should be addressed by further research because it is such a large part - indeed, the majority - of the total effects. The significance of household effects seems plausibly crucial for many interventions, such as poverty alleviation programmes, housing improvement interventions, and air or water quality improvements.

How does the meta-analysis avoid the garbage-in-garbage-out problem? Are you simply averaging across studies, or do you weight by study quality (eg. sample size, being pre-registered, etc)? Did you consider replicating the individual studies?

Do you worry about effect sizes decreasing as StrongMinds scales up? E.g. they start targeting a different population where therapy has smaller effects.

One quibble: "post-treatment effect" sounds weird, I would just call it a "treatment effect".

I share this concern. I don't have much of a baseline on how much meta-analysis overstates effect sizes, but I suspect it is substantial.

One comparison I do know about: as of about 2018, the average effect size of unusually careful studies funded by the EEF (https://educationendowmentfoundation.org.uk/projects-and-evaluation/projects) was 0.08, while the mean of meta-analytic effect sizes overall was allegedly 0.40 (https://visible-learning.org/hattie-ranking-influences-effect-sizes-learning-achievement/), suggesting that meta-analysis in that field on average yields effect sizes about five times higher than is realistic.

The point is, these concerns cannot be dealt with simply by suggesting that they won't make enough difference to change the headline result; in fact they could.

If this issue was addressed in the research discussed here, it's not obvious to me how it was done.

GiveWell rated the evidence of impact for GiveDirectly "Exceptionally strong", though it's not clear exactly what this means with regard to the credibility of studies that estimate the size of the effect of cash transfers on wellbeing (https://www.givewell.org/charities/top-charities#cash). Nevertheless, if a charity were being penalized in such comparisons for doing rigorous research, then I would expect to see assessments like "strong evidence, lower effect size", which is what we see here.

Hi Michael,

I try to avoid the problem by discounting the average effect of psychotherapy. The point isn’t to try and find the “true effect”. The goal is to adjust for the risk of bias present in psychotherapy’s evidence base relative to the evidence base of cash transfers. We judge the CTs evidence to be higher quality. Psychotherapy has lower sample sizes on average and fewer unpublished studies, both of which are related to larger effect sizes in meta-analyses (MetaPsy, 2020; Vivalt, 2020; Dechartres et al., 2018; Slavin et al., 2016). FWIW I discuss this more in appendix C of the psychotherapy report.

I should note that I think the tool I use needs development. Detecting and adjusting for the bias present in a study is a more general problem in social science.

I do worry about the effect sizes decreasing, but the hope is that the cost will drop to a greater degree as StrongMinds scales up.

We say "post-treatment effect" because it makes it clear the time point we are discussing. "Treatment effect" could refer either to the post-treatment effect or to the total effect of psychotherapy, where the total effect is the decision-relevant effect.

Great work! :) Very happy to see the increase in rigour over earlier estimates. If your research is correct (and, in my casual reading of it, I can find no reason why it wouldn't be) this opens up a whole new area of funding opportunities in the global health & wellbeing space!

I'm also excited about the rest of your research agenda. It seems very ambitious ;)

Some things I find interesting:

"we found evidence that group psychotherapy is more effective than psychotherapy delivered to individuals which is in line with other meta-analyses (Barkowski et al., 2020; Cuijpers et al., 2019). One explanation for the superiority is that the peer relationships formed in a group provide an additional source of value beyond the patient-therapist relationship." --> I did not expect group therapy to be more effective. Instead I expected it to be less effective per person, but more cost effective in total. This is great news.

I am also surprised by the extremely low cost of lay therapy. Is there any correlation between the effectiveness of lay therapy and its cost? I can imagine training costing money but increasing effectiveness.

Most charities not responding/willing to share their costs is .. maybe not so surprising? Let's hope that changes if/when StrongMinds gets a bunch of funding, and you develop your reputation!

Last question: what's HLI's current funding situation? (Current funding, room for funding in different growth scenarios)

Our funding situation is, um, "actively seeking new donors"! We haven't yet filled our budget for 2022.

Our gap up to the end of 2022 on our lean budget is £120k; that's the minimum we need to 'keep the lights on'.

On our growth budget, the gap to the end of 2022 is probably £300k; I'm not sure we could efficiently scale up much faster than that. (But if someone insisted on giving me more than that, I would have a good go!)

Hi Michael, has the funding situation of the HLI changed in the last three months?

I'm especially interested to know if this analysis and the discussion around it brought new funds.

Also, are financial statements for 2020 and 2021 from the HLI available somewhere by any chance?

I'm thinking about directing there some of my donations for 2022

Hello Lorenzo,

Sorry for the slow reply on this - I've been taking a bit of a break from the EA forum.

I'm pleased to report the funding situation at HLI has substantially improved on the back of our new research. We've raised enough money that we've switched to our 'growth' budget (most of the difference between 'lean' and 'growth' is hiring 2 new staff). However, we are still $50k short of funding for our growth budget for 2022 and donations would be welcome! Feel free to reach out to me privately too.

I'll talk to our fiscal sponsor, PPF, and find out about the financial statements.

Hi Michael,

You replied in less than a week, I consider it a fast reply, nothing to be sorry about!

I'm so very glad to hear that the funding situation of the HLI has improved, I think the work you're doing is really important and something that was/is sorely missing when trying to maximize and align impact.

On a personal note, I'll take this opportunity to mention that I find your posts and comments on this forum very valuable.

As someone ignorant about philosophy and happiness research, that's how I was introduced to important topics like population ethics and WELLBYs. It helped me understand much better my intuitive uneasiness about GiveWell's values and cleared up a lot of internal confusion.

Seeing a different perspective in what sometimes seems a bit of a monoculture (EA short-term interventions to improve human lives), and seeing the importance of SWB being relentlessly pushed for years was very enlightening and inspiring.

So thank you for all your amazing work!

Hi Siebe, thank you for the kind words! We agree that using SWB could help us find new opportunities! We’re excited to explore more of this area.

I was also surprised by the things you mention, but I think they make sense on reflection. I can share more of my reasoning if you'd like (but I'm unsure if that's what you were asking for).

We don’t have enough information to estimate the relationship between cost and effectiveness, but this is an interesting question! The issue is that we lack studies that contain both the effects and the costs of psychotherapy. However, we should be able to get cost information from another psychotherapy NGO operating in LMICs, so we hope to analyze that too.

I will let Michael comment on the funding situation!

I may be misremembering, but doesn't GiveDirectly give to whole villages at a time, anyway, making negative spillover very unlikely? If that's the case, it seems like all of the spillover effects should be positive (in expectation).

Do you have any thoughts on how the spillover effects of these interventions might compare, and is there any interest in looking further into this? Mental health interventions may also improve productivity (and so increase income), and people's mental health can affect others (especially family, and parents' mental health on children in particular) in important ways. On the other hand, people build wealth (and other resources, including human capital) within their communities, and cash transfers/deworming could facilitate this, but this may happen over longer time scales.

I would guess the effects on SWB through increased income for the direct beneficiaries of StrongMinds are already included in the measurements of effects on SWB, assuming the research participants were similar demographically (including in income, importantly) as the beneficiaries of StrongMinds.

EDIT: Saw this in your post:

Hi Michael and thank you for your comments and engaging with our work!

To my understanding, GiveDirectly gives cash transfers to everyone in a village who is eligible. GiveWell says this means almost everyone in a village receives CTs in Kenya and Uganda but not Rwanda (note that GiveDirectly no longer works in Uganda). So it seems like negative spillovers are still possible. However, I think you’re still right that it makes negative spillovers less likely.

It’s tough to say how the (intra-household) spillovers compare. I guess that CTs could provide a bit more benefit to the household than psychotherapy, but I am very uncertain about this.

My thinking is that household spillovers are at least what your family gets for having you be happier. As you say, “people's mental health can affect others (especially family, and parents' mental health on children in particular) in important ways.” I expect this to be about balanced across interventions. Then there are the other benefits, which I think will mostly be pecuniary. In this case, it seems like cash transfers, if shared, will boost the household’s consumption more than psychotherapy. Again, we are quite uncertain about how spillovers compare across interventions, but it seems important to figure out what’s going on at least within the household. I can go into more detail if you’d like.

We are very interested in looking further into how the spillover effects of these interventions might compare, particularly intra-household spillovers. But as you might guess, the existing evidence is very slim. To advance the question we need to either wait for more primary research to be done or ask researchers for their data and do the analysis ourselves. We will revisit this topic after we’ve looked into other interventions.

I think that’s pretty much correct!

How would the possibility of scale norming with life satisfaction scores (using the scales differently across people or over time, in possibly predictable ways) affect these results? There's a recent paper on this, and also an attempt to correct for this here (video here). (I haven't read any of these myself; just the abstracts.)

(Disclosure: I’m the author of the second linked paper, board member of HLI, and a collaborator on some of its research.)

Hi Michael!

In my paper on scale use, I generally find that people who become more satisfied tend to also become more stringent in the way they report their satisfaction (i.e., for a given satisfaction level, they report a lower number). As a consequence, effects tend to be underestimated.

If effects are underestimated by the same amount across different variables/treatments, scale norming is not an issue (apart from costing us statistical power). However, in the context of this post, if (say) the change in reporting behaviour is stronger for cash transfers than for psychotherapy, then cash transfers will seem relatively less cost-effective than psychotherapy.
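Here is a toy Python sketch with made-up numbers that shows the concern concretely. Purely for illustration, it assumes that scale norming hides a fixed fraction of each intervention's true change, so "the same amount" is read as the same proportion. The attenuation values, the unit costs, and the helper names `measured_effect` and `ce_ratio` are hypothetical and are not taken from the linked paper.

```python
def measured_effect(true_effect, attenuation):
    # Hypothetical model: scale norming hides a fixed fraction of the true change.
    return true_effect * (1.0 - attenuation)


def ce_ratio(effect_therapy, cost_therapy, effect_cash, cost_cash):
    # Cost-effectiveness (effect per unit cost) of psychotherapy relative to cash transfers.
    return (effect_therapy / cost_therapy) / (effect_cash / cost_cash)


# Hypothetical inputs: both interventions are truly equally cost-effective (true ratio = 1).
true_therapy, true_cash, cost = 1.0, 1.0, 1.0

# Same attenuation for both interventions: the relative comparison is unaffected.
same = ce_ratio(measured_effect(true_therapy, 0.2), cost,
                measured_effect(true_cash, 0.2), cost)

# Stronger attenuation for cash transfers: psychotherapy looks relatively better.
different = ce_ratio(measured_effect(true_therapy, 0.2), cost,
                     measured_effect(true_cash, 0.4), cost)

print(round(same, 3), round(different, 3))  # 1.0 1.333
```

In this toy setup, equal attenuation leaves the ratio at 1. Attenuation that is stronger for cash transfers makes psychotherapy look about 1.33 times as cost-effective, even though the true ratio is 1.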

To assess whether this is indeed a problem, we’d either need data on so-called vignettes (link), or people’s assessment of their past wellbeing. Unfortunately, as far as I know, this data does not currently exist.

That being said, in my paper (which is based on a sample from the UK), I find that accounting for changes in scale use does not, compared to the other included variables, result in statistically significantly larger associations between income and satisfaction.

This Twitter thread from economist Chris Blattman, who "spent the last 15 years studying cash and also CBT", is an interesting response to the Vox article based on this study. An excerpt:

Do you have any plans to look into the welfare benefits from GiveWell's life-saving charities to those who would otherwise lose loved ones (mostly parents losing their children)?

Yes! We've looked into this a bit already in our report on comparing the value of doubling consumption to saving the life of a child using SWB. We plan to revisit and expand on this work.

I recently looked into StrongMinds' "research" and their findings. I was extremely disappointed by the low standards. It seemed like they simply wanted to make up super-good numbers. Their results are extremely unrealistic. Are there new results from proper research?

I'm interested in reading critiques of StrongMinds' research, but downvoted this comment because I didn't find it very helpful or constructive. Would you mind saying a bit more about why you think their standards are low, and the evidence that led you to believe they are "making up" numbers?

They did not have a placebo control group, for example some kind of unstructured talking group: ideally an intervention known to be "useless" but that sounds plausible. So we do not know which effects are due to regression to the mean, socially desirable answers, etc. This alone is basically enough to make their research rather useless. And proper control groups have been common for quite a while.

There was no "real" evaluation of the results. They relied only on what their patients said, without checking whether it was correct (e.g., whether children were going to school more often), not even for a subgroup.

They had the impression that patients answered in a socially desirable way, and they addressed that problem completely inadequately: they argued that socially desirable answers would occur only at the end of the treatment, but not near the end of it. ?! So they simply took the near-end numbers at face value. ?!

If their depression treatment is as good as they claim, then it is orders of magnitude better than ALL common treatments in high-income countries. And much cheaper. And faster. And with less specialized instructors…?! Did they invent something new? Nope. They took an already existing treatment, and now it works SO much better? This seems implausible to me.

As far as I know, SoGive is reviewing StrongMinds' research. They should be able to confirm (or refute) my comments here.

All the other points you mentioned seem very relevant, but I somewhat disagree about the importance of a placebo control group when it comes to estimating counterfactual impact. If the control group is assigned to standard of care, they will know they are receiving no treatment and thus will not experience any placebo effects (though, contrary to what you wrote, regression to the mean is still expected in that group), while the treatment group experiences placebo + "real effect from treatment". This makes causal attribution (placebo vs. treatment) difficult, but on the other hand it is exactly what happens in real life when the intervention is rolled out!

If there is no group psychotherapy, the would-be patients receive standard of care, so they will not experience the placebo effect either. Thus a non-placebo design estimates precisely what we are considering doing in real life: giving an intervention to people who will know that they are being treated and who would otherwise have received standard of care (in the context of Uganda, this presumably means receiving nothing?).
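A quick simulation may help show why a standard-of-care control arm still accounts for regression to the mean. This is a toy Python sketch. Every parameter is invented for illustration: the score scale, the enrolment cutoff, and the sizes of the placebo and specific effects. People are enrolled only if their baseline score is low. The control arm receives nothing, and the treated arm receives the placebo effect plus the specific treatment effect.

```python
import random
import statistics


def simulate_trial(n=20000, placebo=0.3, specific=0.7, cutoff=4.0, seed=0):
    """Toy RCT with a standard-of-care control arm (all parameters hypothetical).

    Returns (mean change in control arm, mean change in treated arm,
    difference between arms).
    """
    rng = random.Random(seed)
    control, treated = [], []
    for _ in range(n):
        true_level = rng.gauss(5.0, 1.0)             # person's stable underlying score
        baseline = true_level + rng.gauss(0.0, 1.0)  # noisy baseline measurement
        if baseline >= cutoff:
            continue                                 # only people who score low are enrolled
        follow_up = true_level + rng.gauss(0.0, 1.0) # fresh noise at follow-up
        if rng.random() < 0.5:
            follow_up += placebo + specific          # treated arm: placebo + specific effect
            treated.append(follow_up - baseline)
        else:
            control.append(follow_up - baseline)     # standard of care: no extra effect
    control_change = statistics.mean(control)
    treated_change = statistics.mean(treated)
    return control_change, treated_change, treated_change - control_change


control_change, treated_change, effect = simulate_trial()
print(round(control_change, 2), round(treated_change, 2), round(effect, 2))
```

The control arm improves on average with no treatment at all, which is regression to the mean. A before/after comparison of the treated arm alone would therefore overstate the effect. The difference between arms should land close to the combined placebo-plus-specific effect (1.0 with these made-up values), but, as noted above, it cannot split that total into its two parts.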

Of course, there are issues with blinding the evaluators; whether StrongMinds has done so is unclear to me. All of your other points seem fairly strong, though.

Thanks for commenting. I have to agree with you and partly disagree with my earlier comment (on the placebo point). Actually, placebo effects are fine, and if a placebo helps people: great!

And yes, getting a specific treatment effect plus the placebo effect is better (and closer to real life) than getting no treatment at all.

"Still: I thought it would be good to make this comment right now, so people see my opinion."

I think it would have been better to wait until you had time to give proper arguments for your views. I agree with Stephen that the above comment wasn't helpful or constructive.

I think the follow-up is much more helpful, but I found the original helpful too. It may be possible to say the same thing less rudely, but "I think StrongMinds' research is poor" is still a useful comment to me.

I disagree. I should also say that the follow-up looked very different when I commented on it; it was extensively edited after I had commented.

Please don't get me wrong. I do not like StrongMinds' research for the above-mentioned reasons (I am sure nobody got me wrong on this), and for some other reasons. But that does not mean that their therapy work is bad or inefficient. Even if they overestimate their effects by a factor of 4 (it might be 20, it might be 2; I just made those numbers up), it would still be very valuable work.

I think a placebo effect is involved somewhere. People may think something is helpful when it is not.

I recently read the article at https://www.health.harvard.edu/mental-health/the-power-of-the-placebo-effect about it. I was a bit shocked, to be honest.

P.S. I do not want to offend anybody.